
Today, machine learning and deep learning are used across many industries, and they have driven remarkable improvements in computer vision. In the last few years, however, many real-world applications have started to demand real-time, on-device processing. This calls for models that are small and fast enough to run on resource-constrained devices while still performing well. Deep learning models deliver the accuracy these applications need, but they consume a lot of memory and compute. So there was a need for models that keep the accuracy of large networks at a much smaller size. That’s where AI compression techniques come in.

In this article, we discuss model compression techniques in detail. If you are looking to become an AI developer, consider enrolling in the Deep Learning Course, where you can learn the fundamental concepts of AI. So let’s begin discussing these techniques:

Popular AI Compression Techniques:

Below, we discuss some of the most popular AI compression techniques. If you have taken Deep Learning Training in Noida, you can also take advantage of its practical training to implement what you learn.

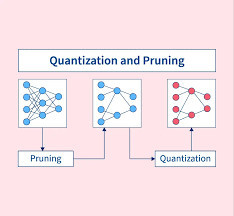

1. Pruning Technique

Pruning is a way to make deep neural networks (DNNs) smaller and faster. In a trained network, many weights (or parameters) don’t help much with the final result. After training, we can remove these unimportant weights. This reduces the size of the network without affecting its accuracy much.
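As a rough illustration, the snippet below sketches unstructured magnitude pruning in NumPy: it treats the weights with the smallest absolute values as the "unimportant" ones and sets a chosen fraction of them to zero. The function name `magnitude_prune` and its `sparsity` parameter are hypothetical, and using magnitude as the importance measure is an assumption; other criteria exist.

```python
import numpy as np

def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Return a copy of `weights` with the `sparsity` fraction of
    smallest-magnitude entries set to zero (hypothetical helper)."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(sparsity * weights.size)          # number of weights to remove
    flat = weights.ravel().copy()             # work on a flat copy, leave the input intact
    smallest = np.argsort(np.abs(flat))[:k]   # indices of the k smallest |w|
    flat[smallest] = 0.0
    return flat.reshape(weights.shape)

# Usage: prune half of a random 4x4 weight matrix
rng = np.random.default_rng(0)
w = rng.normal(size=(4, 4))
pruned = magnitude_prune(w, sparsity=0.5)
print(f"zeroed {np.sum(pruned == 0)} of {w.size} weights")  # zeroed 8 of 16 weights
```

Zeros only save memory when they are stored in a sparse format, and in practice a pruned network is often fine-tuned for a while to recover lost accuracy.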

2. Quantization Technique

Quantization makes a neural network smaller by using fewer bits to store each weight. For example, instead of using 32 bits, we can use 16, 8, 4, or even 1 bit to store a weight. This can greatly reduce the network size: going from 32-bit to 8-bit weights, for instance, makes the stored weights four times smaller.

There are two types of quantization:

● Post-training quantization – applied after training is done.

● Quantization-aware training – applied during training.
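A minimal sketch of post-training quantization, assuming a simple symmetric, per-tensor 8-bit scheme: each float32 weight is divided by a single scale, rounded to the nearest integer, and stored as int8. The helper names `quantize_int8` and `dequantize` are hypothetical; deep learning frameworks ship their own quantization tooling with more options (per-channel scales, zero points, calibration).

```python
import numpy as np

def quantize_int8(weights: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor post-training quantization to int8 (hypothetical helper)."""
    max_abs = float(np.max(np.abs(weights)))
    # One float scale for the whole tensor maps [-max_abs, max_abs] onto [-127, 127]
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map int8 values back to approximate float32 weights."""
    return q.astype(np.float32) * scale

# Usage: random float32 values stand in for a trained layer's weights
rng = np.random.default_rng(0)
w = rng.normal(size=1000).astype(np.float32)
q, scale = quantize_int8(w)
w_hat = dequantize(q, scale)
print(w.nbytes, q.nbytes)            # 4000 1000
print(np.max(np.abs(w - w_hat)))     # worst-case rounding error
```

Storage drops by 4x, and each dequantized weight differs from the original by at most about half a quantization step (`scale / 2`). Quantization-aware training goes further by simulating this rounding during training so the network can adapt to it.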

3. Knowledge Distillation Technique

In knowledge distillation, a large and powerful model is first trained on a dataset. This model is called the teacher. We then use the teacher to help train a smaller model, called the student. The student works on the same task as the teacher, and its goal is to learn from the teacher and perform nearly as well despite its smaller size. This is quite different from transfer learning, where we reuse the same model and just replace some of its layers for a new task. Right now, knowledge distillation is mostly used for classification tasks (like recognizing objects in images).

It’s much harder to use this method for more complex tasks like object detection (finding where objects are in an image) and segmentation (labeling each pixel of an image). Training a smaller student network from a larger teacher network in these cases is more challenging.
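For classification, one common way to let the student learn from the teacher is the soft-target loss popularized by Hinton et al. (2015): both networks' logits are softened with a temperature, and the student is penalized by the KL divergence between the two distributions, mixed with ordinary cross-entropy on the true labels. The NumPy sketch below computes only this loss (no training loop), assuming logits of shape (batch, classes); `distillation_loss`, `temperature=4.0`, and `alpha=0.5` are illustrative choices, not values from this article.

```python
import numpy as np

def log_softmax(logits: np.ndarray, temperature: float = 1.0) -> np.ndarray:
    """Numerically stable log-softmax over the last axis, with temperature."""
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def distillation_loss(student_logits: np.ndarray, teacher_logits: np.ndarray,
                      labels: np.ndarray, temperature: float = 4.0,
                      alpha: float = 0.5) -> float:
    """alpha * (soft-target KL term) + (1 - alpha) * (hard-label cross-entropy)."""
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_p_student = log_softmax(student_logits, temperature)
    # KL(teacher || student) between the softened distributions, averaged over the batch
    kl = np.sum(np.exp(log_p_teacher) * (log_p_teacher - log_p_student), axis=-1).mean()
    # Ordinary cross-entropy of the student against the true labels (temperature 1)
    log_p_hard = log_softmax(student_logits)
    ce = -log_p_hard[np.arange(len(labels)), labels].mean()
    # Scaling by temperature**2 keeps the soft-target term's gradients comparable in size
    return float(alpha * temperature**2 * kl + (1 - alpha) * ce)

# Usage: a batch of 8 examples with 10 classes (random stand-ins for real logits)
rng = np.random.default_rng(0)
teacher = rng.normal(size=(8, 10))
student = rng.normal(size=(8, 10))
labels = rng.integers(0, 10, size=8)
print(distillation_loss(student, teacher, labels))
```

In a real setup, this loss would be minimized with respect to the student's weights only, with the teacher kept frozen.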

4. Low-Rank Factorization Technique

Low-rank factorization is a method used to make deep neural networks smaller by breaking large matrices into smaller ones. These matrices contain the network’s weights.

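For example, a big weight matrix with dimensions m x n and rank r can be split into the product of two smaller matrices of size m x r and r x n. When r is much smaller than m and n, the two factors hold r(m + n) values instead of m x n. This reduces memory use in dense layers and speeds up computations in convolutional layers.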
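The truncated singular value decomposition (SVD) is one standard way to obtain such factors: keeping the top r singular values gives the best rank-r approximation in the least-squares (Frobenius-norm) sense. The NumPy sketch below assumes a single dense-layer weight matrix; `low_rank_factorize` is a hypothetical helper name.

```python
import numpy as np

def low_rank_factorize(W: np.ndarray, rank: int) -> tuple[np.ndarray, np.ndarray]:
    """Approximate W (m x n) by A @ B, with A: m x rank and B: rank x n,
    using a truncated SVD (hypothetical helper)."""
    U, S, Vt = np.linalg.svd(W, full_matrices=False)
    A = U[:, :rank] * S[:rank]   # scale the kept left singular vectors by their singular values
    B = Vt[:rank, :]
    return A, B

# Usage: a 256 x 512 matrix that is close to rank 16
rng = np.random.default_rng(0)
m, n, r = 256, 512, 16
W = rng.normal(size=(m, r)) @ rng.normal(size=(r, n)) + 0.01 * rng.normal(size=(m, n))
A, B = low_rank_factorize(W, r)
print(W.size, A.size + B.size)     # 131072 12288
rel_err = np.linalg.norm(W - A @ B) / np.linalg.norm(W)
print(f"relative error: {rel_err:.4f}")
```

A dense layer computing `W @ x` can then compute `A @ (B @ x)` instead, which takes r(m + n) multiply-adds rather than m x n. How much accuracy survives depends on how close the real weights are to low rank, so the rank is usually chosen by checking the error or by fine-tuning afterward.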

Low-rank factorization can also be applied to pre-trained models as a post-processing step to reduce model size. If you have taken a Machine Learning Course in Noida, it will help you apply this knowledge and give you the hands-on experience needed to master these concepts.

Conclusion:

From the above discussion, it is clear that deep neural network (DNN) compression and speed-up methods have improved a lot in the last few years. Model compression techniques play an important role in making deep learning models more efficient and practical for real-world applications, especially on devices with limited resources. As AI continues to grow across industries, the demand for lightweight, fast, and accurate models is higher than ever.
